Artificial Intelligence (AI) has quickly become a dominant force, influencing sectors from healthcare and finance to entertainment and transportation. As its influence expands, it raises questions that challenge our social and moral values. The question is no longer only what AI can do, but what it should do. This introduction explains why ethics and governance matter in guiding AI’s trajectory, so that its benefits are realized while its risks are kept in check.

| Sector | % Growth 2018 | % Growth 2019 | % Growth 2020 | % Growth 2021 | % Growth 2022 | % Growth 2023 |
|---|---|---|---|---|---|---|
| Healthcare | 15% | 18% | 20% | 22% | 24% | 27% |
| Finance | 10% | 12% | 15% | 17% | 19% | 22% |
| Entertainment | 9% | 10% | 11% | 13% | 15% | 17% |
| Transportation | 8% | 9% | 10% | 11% | 12% | 14% |

AI’s rapid integration into daily life promises major advances. In some studies, machine learning models have detected certain diseases earlier than clinicians; financial algorithms help forecast market movements; and virtual assistants make daily routines more efficient. Yet these advances also bring challenges.

Many of these challenges are ethical. Consider the reports of AI systems unintentionally exhibiting racial or gender bias. Or consider deepfakes: AI-generated media that can convincingly imitate real people and events, raising genuine concerns about misinformation. Then there are self-driving cars. While they promise safer roads, they also raise dilemmas such as the well-known “trolley problem”: when a collision is unavoidable, how should the vehicle choose between harmful outcomes?

This is where governance comes in, acting as a regulatory compass through AI’s complex ethical landscape. But what exactly is the role of governance, and how does it differ across nations, each with its own sociocultural and legal context?

Understanding AI Ethics

At its core, ethics refers to the moral principles that guide our decisions and actions. When we apply this to AI, we’re examining the values and considerations that inform how AI systems are designed, implemented, and used in various contexts. But why is AI ethics so crucial, and what sets it apart from general ethical considerations?

The Uniqueness of AI Ethics

- Scalability: Once trained, an AI model can be deployed across millions of interactions at little additional cost. A single unethical choice made during design can therefore have widespread consequences.

- Opacity: Many AI systems, particularly deep learning models, function as ‘black boxes’, making their decision-making processes difficult to decipher. This lack of transparency compounds ethical challenges.

- Autonomy: AI systems can make decisions and take actions with limited or no human intervention, raising concerns about accountability and control.

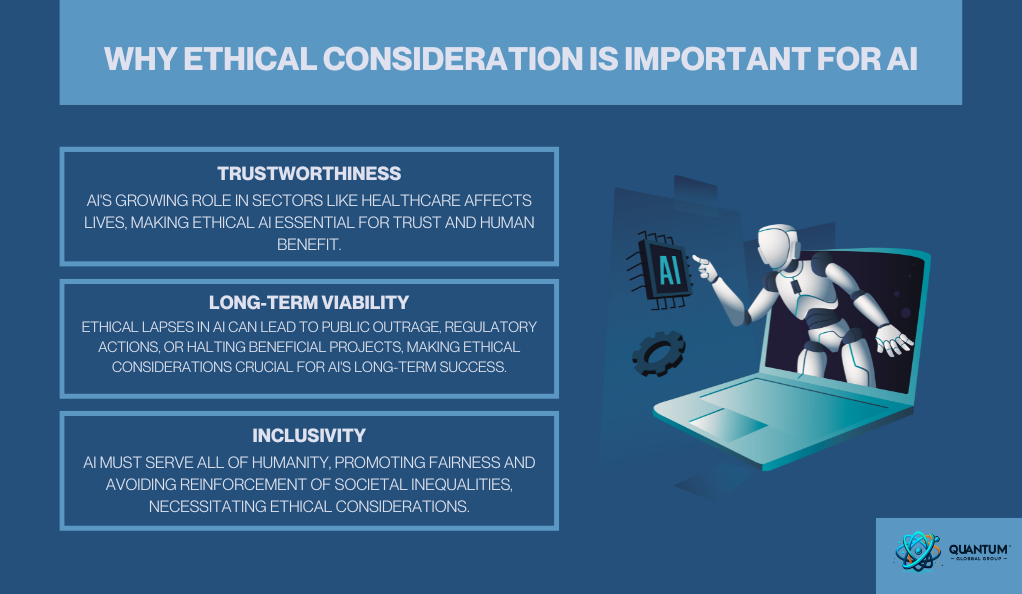

Why Ethical Consideration is Important for AI

- Trustworthiness: As AI becomes more integrated into critical areas of society, such as healthcare and criminal justice, its decisions have direct impacts on human lives. Ethical AI fosters trust, ensuring that the technology works for humanity’s benefit.

- Long-Term Viability: Ethical missteps can lead to public backlash, regulatory crackdowns, or even the shelving of beneficial AI projects. Ethical considerations are not just moral but also practical, ensuring AI’s sustainable future.

- Inclusivity: AI should benefit all of humanity, not just a privileged few. Ethical considerations help ensure that AI systems are fair and do not perpetuate or exacerbate existing societal inequalities.

Key Ethical Dilemmas in AI

As AI’s reach and capabilities expand, so does the complexity of the ethical questions it raises. Some of these dilemmas are new, arising from AI’s unique characteristics, while others are age-old questions made more urgent by AI’s ubiquity. Here, we explore three of the most pressing: bias, privacy, and accountability.

Bias and Fairness: The Risks of Perpetuating Societal Prejudices

AI systems are often trained on vast datasets that can reflect biases present in society. Models trained on such data can perpetuate or even amplify those biases.

- Example: A job recruitment AI trained on past hiring data might be biased against certain ethnic groups or genders, reflecting past unjust hiring practices.

Addressing AI bias requires a multi-faceted approach:

- Diverse Training Data: Ensuring that the data used to train AI systems is representative of all groups it will serve.

- Regular Audits: Periodic checks on AI outputs to identify and rectify biases.

- Stakeholder Participation: Involving individuals from varied backgrounds in the AI development process to gain multiple perspectives.
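A regular audit can be made more concrete with the minimal Python sketch below. It shows one common check: compare selection rates across groups and flag any group whose rate falls below 80% of the highest group’s rate (the “four-fifths” heuristic from U.S. employment guidance). The function names, the 0.8 threshold, and the sample data are illustrative assumptions, not a standard API. A flag is a prompt for human investigation, not proof of bias.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the fraction of positive decisions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_audit(decisions, groups, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate.

    A ratio below `threshold` (0.8 mirrors the four-fifths heuristic) flags
    the group for human investigation; it is not by itself proof of bias.
    """
    rates = selection_rates(decisions, groups)
    best = max(rates.values())
    report = {}
    for group, rate in rates.items():
        # If no group received any positive decision, there is no disparity to measure.
        ratio = rate / best if best > 0 else 1.0
        report[group] = {"rate": rate, "ratio": ratio, "flagged": ratio < threshold}
    return report


if __name__ == "__main__":
    # Hypothetical audit sample from a recruitment model: 1 = advanced, 0 = rejected.
    decisions = [1, 1, 0, 1, 0, 1, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
    for group, row in disparate_impact_audit(decisions, groups).items():
        print(group, row)
```

In this sample, group A advances 60% of the time and group B 40%. That gives B a ratio of about 0.67, so B is flagged for review.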

Privacy Concerns: Striking a Balance Between Utility and Individual Rights

As AI systems process vast amounts of personal data, there are genuine concerns about data misuse and breaches.

- Example: An AI system recommending movies might need to access one’s watch history, but should it also be allowed to analyze one’s personal messages to make recommendations?

To tackle these concerns:

- Data Minimization: Use only the data necessary for the AI task at hand.

- Differential Privacy: Add carefully calibrated statistical noise to data or query results so that AI systems can learn aggregate patterns without revealing information about any individual.

- Transparent Data Usage Policies: Ensure users are informed and have control over how their data is used.
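Data minimization and differential privacy can both be sketched in a few lines of Python. The example assumes a hypothetical movie-recommendation record format. First, it drops every field outside an allowlist, so personal messages never reach the model. Second, it releases a count using the Laplace mechanism, a standard differential-privacy technique. A count changes by at most 1 when any one person is added or removed, so adding noise with scale 1/ε is sufficient. The field names and ε value are assumptions for illustration. A production system should rely on a vetted differential-privacy library, since naive floating-point noise sampling has known weaknesses.

```python
import random

# Hypothetical allowlist: the only fields the recommender actually needs.
ALLOWED_FIELDS = {"user_id", "watch_history"}


def minimize(record):
    """Data minimization: keep only allowlisted fields, drop everything else."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng=random):
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1 / epsilon is sufficient.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1 / epsilon, rng)


if __name__ == "__main__":
    raw = [
        {"user_id": 1, "watch_history": ["drama", "documentary"], "messages": ["private text"]},
        {"user_id": 2, "watch_history": ["comedy"], "messages": ["private text"]},
        {"user_id": 3, "watch_history": ["documentary"], "messages": ["private text"]},
    ]
    data = [minimize(record) for record in raw]
    print(data[0])  # the "messages" field is gone
    noisy = private_count(
        data,
        lambda record: "documentary" in record["watch_history"],
        epsilon=0.5,
        rng=random.Random(0),
    )
    print(f"noisy documentary viewers: {noisy:.2f}")
```

Smaller ε values add more noise and give stronger privacy. Larger values give more accurate counts but weaker guarantees.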

Accountability: Who is Responsible When AI Goes Wrong?

As AI systems make decisions autonomously, assigning responsibility for mistakes becomes a challenge.

- Example: If a self-driving car causes an accident, is the fault with the car’s manufacturer, the software developer, the car’s owner, or the AI itself?

Solutions to the accountability dilemma:

- Clear Legislation: Laws need to be updated to clarify accountability in scenarios where AI systems operate autonomously.

- Explainable AI: Systems that can explain their decisions make it easier to trace mistakes back to specific causes.

- Human Oversight: Ensuring that critical AI decisions are always subject to human review.
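Explainability and human oversight can work together, as the Python sketch below shows. It assumes a hypothetical linear scoring model; the weights, threshold, and review margin are invented for illustration. For a linear model, each feature’s contribution is simply its weight times its value, so every decision can be logged with an exact breakdown. Deep models would need approximate attribution methods such as SHAP or LIME instead. Decisions marked as critical, or whose scores fall close to the threshold, are routed to a human reviewer.

```python
from dataclasses import dataclass, field

# Hypothetical linear scoring model; features are assumed pre-normalized.
WEIGHTS = {"income": 0.4, "debt_ratio": -0.7, "years_employed": 0.2}
BIAS = -0.1
THRESHOLD = 0.0
REVIEW_MARGIN = 0.15  # scores closer than this to THRESHOLD go to a human


@dataclass
class DecisionRecord:
    score: float
    approved: bool
    contributions: dict  # feature -> weight * value
    needs_human_review: bool
    reasons: list = field(default_factory=list)


def decide(features, critical=False):
    """Score an applicant and log an explanation plus any review triggers."""
    # For a linear model, BIAS plus these contributions equals the score exactly.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    reasons = []
    if critical:
        reasons.append("critical decision: human sign-off required")
    if abs(score - THRESHOLD) < REVIEW_MARGIN:
        reasons.append("score is close to the decision threshold")
    return DecisionRecord(
        score=score,
        approved=score >= THRESHOLD,
        contributions=contributions,
        needs_human_review=bool(reasons),
        reasons=reasons,
    )


if __name__ == "__main__":
    record = decide({"income": 1.0, "debt_ratio": 0.5, "years_employed": 0.5})
    print(f"score={record.score:.2f} approved={record.approved}")
    # List features from most to least influential.
    for name, value in sorted(record.contributions.items(), key=lambda kv: -abs(kv[1])):
        print(f"  {name}: {value:+.2f}")
    print("human review:", record.needs_human_review, record.reasons)
```

Storing the contributions alongside each decision gives investigators a concrete record to examine if the system is later found to have made a mistake.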

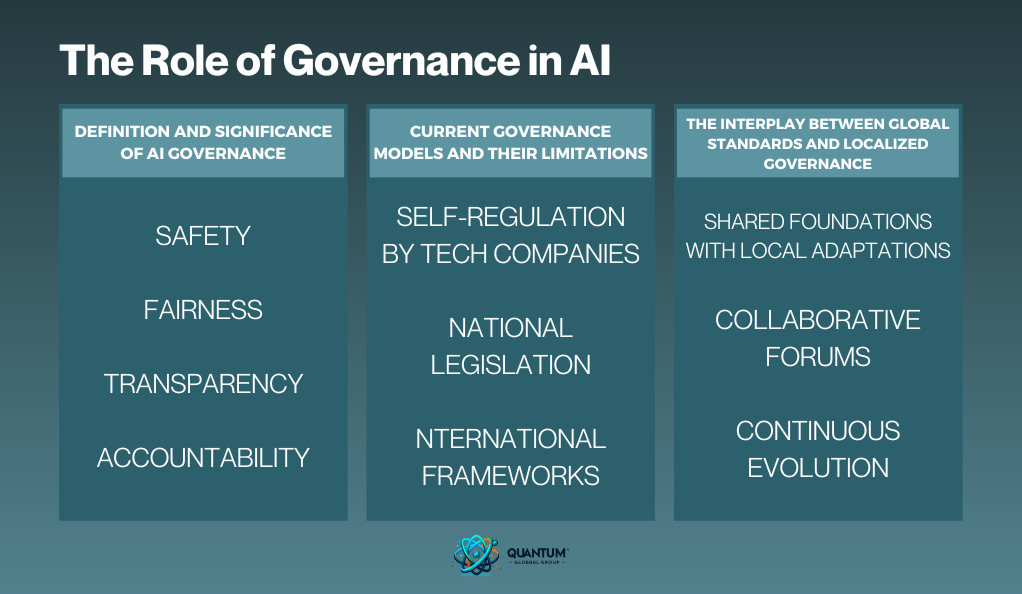

The Role of Governance in AI

Governance, in the context of AI, refers to the systems of rules, practices, and processes by which AI is directed and controlled. Just as governance structures exist in corporations to ensure accountability and ethical business practices, AI governance aims to ensure the ethical creation and deployment of AI. Here, we explore the nuances of AI governance and its growing importance.

Definition and Significance of AI Governance

AI governance is not just about placing restrictions; it’s about creating an environment where AI can flourish responsibly. Effective governance ensures:

- Safety: Ensuring that AI behaves predictably and doesn’t harm humans.

- Fairness: Ensuring that AI systems operate without bias and serve all sections of society equitably.

- Transparency: Ensuring that the workings of AI systems are open and understandable.

- Accountability: Ensuring there are mechanisms to hold the developers and operators of AI systems responsible for their outcomes.

Current Governance Models and Their Limitations

Many AI governance models have been proposed or put into practice, each with its own strengths and limitations.

- Self-regulation by Tech Companies: Many leading tech firms have established their internal ethical guidelines. However, this approach often lacks external oversight, leading to potential conflicts of interest.

- National and Regional Legislation: Jurisdictions such as the European Union, with the GDPR, and China, with its Data Security Law, have enacted binding regulations. These laws offer broader coverage but can be too restrictive or too slow to adapt to a rapidly changing technology landscape.

- International Frameworks: Global standards, like those proposed by the UN or WHO for specific sectors, can create consistency across countries but can be challenging to enforce.

The Interplay Between Global Standards and Localized Governance

While global standards offer a unified approach, AI governance needs to be sensitive to local cultures, economies, and societal structures. Thus, a balance is needed between global consistency and local relevance.

- Shared Foundations with Local Adaptations: Core ethical principles can be universally adopted, while implementation details are localized based on regional requirements.

- Collaborative Forums: Platforms where representatives from different countries discuss, debate, and develop governance models can ensure global harmonization while respecting local nuances.

- Continuous Evolution: Given the rapid pace of AI development, governance models need regular revisiting and revision. This ensures they remain relevant and effective.

AI Governance Across the Globe

One of the fascinating aspects of AI governance is witnessing its evolution across various regions, each influenced by its unique blend of culture, economy, and historical precedents. In this section, we’ll draw comparisons between the AI governance models of select regions to provide a snapshot of the global landscape.

United States: Market-driven Innovation with Oversight

The U.S. approach to AI governance stands out for its focus on fostering innovation and maintaining leadership in the technology sector. Its main features are:

- Private Sector Leadership: Tech giants like Google, Amazon, and Microsoft have been at the forefront, shaping both the development and governance of AI technologies.

- Federal Initiatives: While there is no comprehensive federal law on AI, there are initiatives such as the American AI Initiative that emphasize the importance of AI for national leadership.

- Focus on Ethics and Guidelines: Various American universities and think-tanks are actively working on AI ethics, often in collaboration with tech companies.

European Union: Privacy and Human Rights at the Forefront

The EU’s approach to AI prioritizes individual rights, with particular emphasis on data privacy.

- GDPR: The General Data Protection Regulation has set stringent standards for data privacy, impacting AI systems that process personal data.

- Human-centric AI: The EU promotes AI that respects fundamental rights and upholds principles such as transparency, fairness, and accountability.

- Regulation Proposals: Through the AI Act, proposed by the European Commission in 2021, the EU has been working to set risk-based requirements for high-risk AI systems across sectors.

China: State-led Drive for Global AI Dominance

China’s approach to AI governance intertwines its ambition to be a global AI leader with its unique political and social structures.

- New Generation Artificial Intelligence Development Plan: Issued in 2017, this roadmap outlines China’s strategy to lead the world in AI by 2030, covering research, industry, and policy measures.

- Tightening Data Controls: China’s Data Security Law and Personal Information Protection Law establish a framework for data protection that shapes how AI systems collect and process data.

- Public-Private Collaboration: Tech giants like Alibaba and Tencent collaborate closely with the state on various AI initiatives, blurring the lines between the private sector and government.

Societal Implications of AI Ethics and Governance

The interplay between AI ethics and governance doesn’t operate in a vacuum. These considerations directly impact society, shaping how we interact with technology, and, in turn, how technology affects our lives. Let’s explore some of the profound societal implications of these discussions.

Economic Shifts: Job Transformations and Economic Disparities

- Job Evolution: As AI systems become more prevalent, jobs built around repetitive tasks are especially at risk of automation. At the same time, new roles are emerging in AI design, implementation, and oversight.

- Economic Disparities: In the absence of effective governance, AI’s advantages might primarily benefit tech giants and specific industries, potentially widening the wealth divide.

- Global Economic Balance: Nations that lead in AI research and deployment may gain significant economic advantages on the global stage, reshaping global economic dynamics.

Social Dynamics: Interpersonal Relationships and Trust in Systems

- Over-reliance on AI: As AI becomes more integrated into daily life, people risk relying on it too heavily, which could weaken human judgment and reduce interpersonal interaction.

- Trust in Systems: Ethical mishaps can erode public trust not just in AI systems but in institutions that deploy them. Effective governance can help in building and maintaining this trust.

- AI in Social Media: AI-driven recommendation algorithms on social media can influence public opinion, affect mental health, and even sway elections. Ethical safeguards are vital to prevent misuse and to ensure these systems foster genuine human connection.

Cultural and Philosophical Impacts: Redefining What It Means to be Human

- Human Identity: As AI systems become more advanced, questions arise about human uniqueness. What distinguishes human intelligence and consciousness from AI?

- Ethical Relativism: As AI is developed globally, whose ethical standards should it adhere to? This brings up debates about cultural relativism and universal ethical principles.

- Moral Agency: Can an AI system ever have moral agency? This philosophical question probes the limits of AI and its role in moral and ethical dilemmas.

Strategies for Promoting Ethical AI

As we navigate the complex terrain of AI’s potential and pitfalls, promoting its ethical use becomes paramount. But how can this be achieved systematically? Here, we’ll explore a series of strategies that can be employed by individuals, businesses, and governments to ensure the ethical deployment of AI.

Education and Awareness

- Curriculum Integration: Introduce AI ethics early in educational systems, ensuring that the next generation of developers, users, and policymakers understand the ethical implications.

- Workshops and Training: Regularly organize training sessions for AI professionals, ensuring they remain updated about the evolving landscape of AI ethics.

- Public Awareness Campaigns: Use media and public platforms to educate the general public about the benefits and risks of AI, promoting informed discourse.

Stakeholder Engagement

- User Feedback Systems: Establish platforms where users can provide feedback on AI tools and algorithms, ensuring a continuous feedback loop for improvements.

- Cross-sector Collaboration: Encourage dialogue between tech companies, governments, NGOs, and academics to develop holistic ethical AI guidelines.

- Ethics Committees: Form committees within organizations, comprising members from diverse backgrounds, to review and guide AI projects from an ethical perspective.

Technological Innovations

- Ethics by Design: Actively incorporate ethical principles in AI systems from the beginning, instead of treating them as a secondary consideration.

- Fairness Tools: Develop and deploy tools that continuously monitor and assess the fairness of AI algorithms in real time.

- Transparent Algorithms: Strive for algorithmic transparency, allowing both experts and the general public to understand and trust AI systems.
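A real-time fairness tool can complement the periodic audits mentioned earlier. The Python sketch below keeps a sliding window of recent decisions. It raises an alert when the gap in positive-decision rates between groups (a demographic-parity gap) exceeds a tolerance. The class name, window size, 0.1 tolerance, and minimum sample size are assumptions chosen for illustration. Demographic parity is only one of several fairness definitions, and the right metric depends on the application.

```python
from collections import defaultdict, deque


class FairnessMonitor:
    """Sliding-window monitor for the demographic-parity gap between groups."""

    def __init__(self, window=1000, max_gap=0.1, min_per_group=30):
        self.events = deque(maxlen=window)  # oldest decisions fall off automatically
        self.max_gap = max_gap
        self.min_per_group = min_per_group

    def record(self, group, decision):
        """Log one decision (truthy = positive outcome) for a group."""
        self.events.append((group, int(decision)))

    def rates(self):
        """Positive-decision rate per group, skipping groups with too few samples."""
        totals, positives = defaultdict(int), defaultdict(int)
        for group, decision in self.events:
            totals[group] += 1
            positives[group] += decision
        return {
            group: positives[group] / totals[group]
            for group in totals
            if totals[group] >= self.min_per_group
        }

    def gap(self):
        """Difference between the highest and lowest group rates in the window."""
        rates = self.rates()
        if len(rates) < 2:
            return 0.0
        return max(rates.values()) - min(rates.values())

    def alert(self):
        return self.gap() > self.max_gap


if __name__ == "__main__":
    monitor = FairnessMonitor(window=200)
    # Simulated stream: group A approved 50% of the time, group B 30%.
    for i in range(100):
        monitor.record("A", i % 2 == 0)
        monitor.record("B", i % 10 < 3)
    print(monitor.rates(), f"gap={monitor.gap():.2f}", "ALERT" if monitor.alert() else "ok")
```

Because old decisions fall out of the window, the monitor reacts to recent drift rather than to the model’s lifetime average. The minimum-sample rule avoids alerts driven by small, noisy groups.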

Regulatory and Policy Measures

- Ethical Standards: Governments and international bodies can develop and promote standards for ethical AI, similar to standards in other industries.

- Accountability Frameworks: Establish clear frameworks that determine accountability in cases where AI systems malfunction or cause harm.

- Certification Programs: Introduce certification programs for ethical AI, ensuring that systems deployed in public and private sectors meet predefined ethical criteria.

Encouraging Ethical Business Practices

- Ethical Audits: Regularly conduct third-party audits to assess the ethical implications of AI systems within organizations.

- Incentives for Ethical AI: Governments can provide tax breaks or other incentives to companies that adhere to ethical AI guidelines.

- Consumer-driven Ethics: Educate consumers to demand ethical AI products and services, driving businesses to prioritize ethics due to market demand.

Conclusion

In the realm of technology, few innovations have been as transformative or as contentious as artificial intelligence. As we’ve seen, the ethical implications of AI stretch far beyond mere code, impacting the fabric of society, shaping economies, and influencing personal beliefs and values.

The discussions around AI ethics and governance aren’t just academic exercises; they’re urgent calls to action. As AI continues to evolve and permeate every facet of our lives, its ethical deployment becomes a non-negotiable mandate. Our collective decisions today will set the trajectory for how AI impacts future generations.