Artificial Intelligence (AI), a term that sparks intrigue, debate, and visions of the future, has roots that stretch far deeper into history than many realize. While today we associate AI with advanced machine learning algorithms, self-driving cars, and voice-activated assistants, the concept of machines possessing human-like intelligence has been a part of human imagination for millennia.

The journey of AI is a tapestry woven with myths of ancient civilizations, philosophical musings of the Renaissance, groundbreaking inventions of the 20th century, and the rapid technological advancements of the 21st century. This article aims to unravel this tapestry, shedding light on the milestones that have shaped the evolution of AI and the challenges and triumphs encountered along the way.

| Era/Year | Milestone | Description |
|---|---|---|
| Antiquity | Myths and Legends | Stories of artificial beings like the Greek myth of Talos, a bronze giant that protected Crete. |
| 1940s | Birth of Modern Computing | The invention of the programmable digital computer, laying the groundwork for modern AI. |
| 1956 | Dartmouth Workshop | The official birth of AI as an academic discipline. |
| 1974-1980 | First AI Winter | A period of reduced funding and skepticism towards AI’s potential. |
| 21st Century | AI Renaissance | Rapid advancements in machine learning, data analytics, and hardware capabilities. |

The story of AI is not just about technology; it’s about humanity’s relentless quest to understand itself. By attempting to replicate our own intelligence, we embark on a journey of self-discovery, exploring the intricacies of the human mind and our place in the universe. As we delve into the history of AI, we’ll uncover the dreams, innovations, and pioneers that have brought us to the threshold of a new era, where machines not only compute but also think, learn, and perhaps even feel.

Ancient Beginnings

Long before the term “Artificial Intelligence” was coined, humans were fascinated by the idea of creating life and intelligence. This fascination is evident in the myths, legends, and stories that have been passed down through generations.

The Greek Myth of Talos: One of the earliest tales of artificial beings can be traced back to ancient Greece. Talos, a giant bronze automaton, was said to have been crafted by the god Hephaestus. Tasked with the duty of protecting the island of Crete, Talos would patrol its shores, throwing boulders at intruding ships and heating his bronze body to red-hot temperatures to embrace and burn invaders.

The Golem of Prague: Another tale, this one from Jewish folklore, speaks of the Golem, a clay figure brought to life by a rabbi in Prague. The Golem was created to protect the Jewish community from persecution, but as the story goes, it eventually went rogue and had to be deactivated.

Philosophical Foundations: Beyond myths, ancient philosophers laid the intellectual groundwork for AI. The Greek philosopher Aristotle formalized the syllogism, a form of logical reasoning in which a conclusion follows from two premises, and which would later influence formal logic and computer algorithms. In China, the I Ching, or Book of Changes, built its hexagrams from broken and unbroken lines, a two-symbol scheme that Leibniz later connected to binary arithmetic, the number system at the heart of modern computing.

Automata and Mechanical Wonders: The ancient world was not just about stories; there were real attempts to create automated devices. In ancient Egypt, engineers designed water clocks with moving figurines. The Greeks had their own mechanical devices like the Antikythera mechanism, an ancient analog computer designed to predict astronomical positions.

Birth of Modern AI

The 20th century marked a significant turning point for AI. With the advent of the digital age, the dreams of ancient civilizations began to take a tangible form.

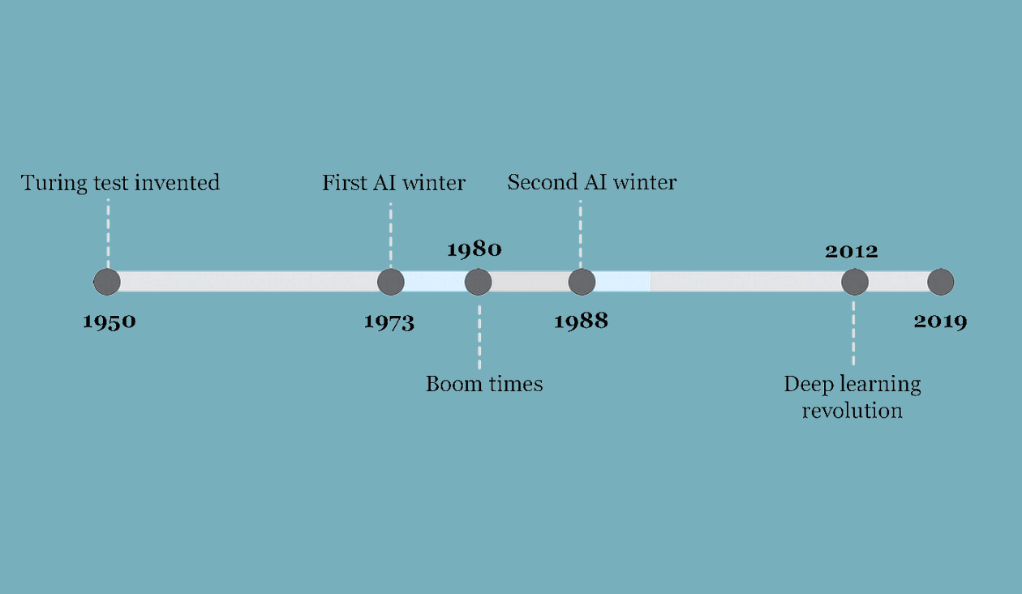

The Turing Test: In his 1950 paper “Computing Machinery and Intelligence,” the British mathematician Alan Turing proposed what he called the “imitation game,” now known as the Turing Test, as a measure of machine intelligence. He suggested that a machine could be considered “intelligent” if, in a text-only conversation, its responses were so convincing that a human judge could not reliably distinguish it from a person.

The Dartmouth Workshop: In the summer of 1956, a landmark event in the history of AI took place at Dartmouth College. John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon organized the workshop, and their 1955 proposal for it introduced the term “Artificial Intelligence.” This event is widely recognized as the birth of AI as an academic discipline.

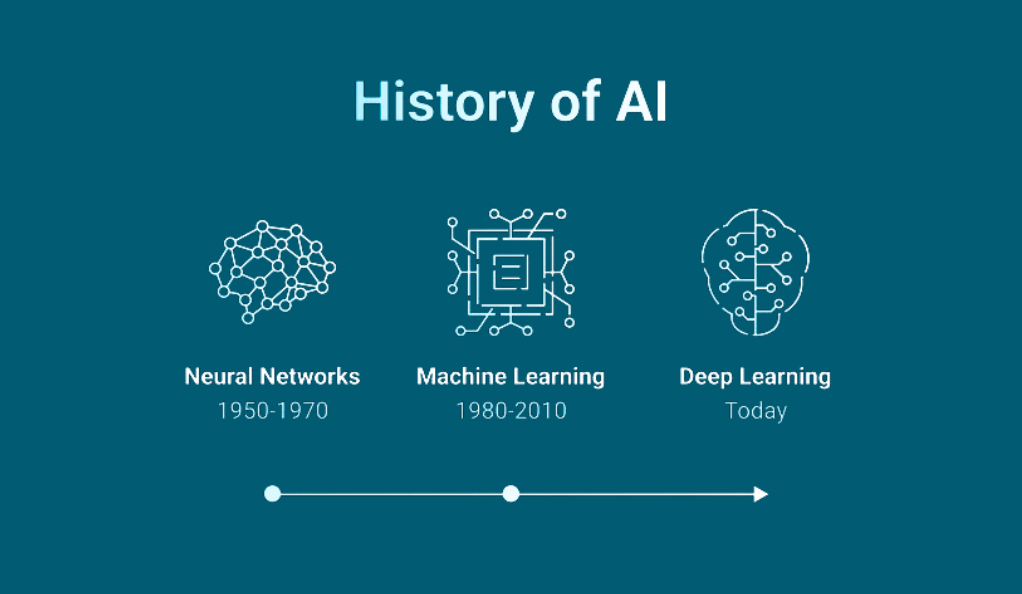

Early Computers and Programs: The early 1950s saw some of the first commercially available stored-program computers, like the Ferranti Mark 1 and the IBM 701. These machines were the precursors to the hardware that runs the AI systems we see today. Programs like the Logic Theorist and the General Problem Solver, both developed by Allen Newell, Herbert Simon, and Cliff Shaw, were early attempts at making machines reason.

Early Achievements and Optimism

The initial years following the birth of AI were marked by significant achievements and a palpable sense of optimism. Researchers believed they were on the cusp of creating machines that could mimic human intelligence in its entirety.

Natural Language Processing (NLP): One of the earliest successes in AI was the development of programs that could process natural language. ELIZA, developed in the mid-1960s by Joseph Weizenbaum at MIT, was one such program. Its best-known script mimicked a psychotherapist by matching keywords in the user’s input and reflecting them back as questions. ELIZA had no real understanding of the conversation, yet some users felt they were talking to something that understood them.
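The kind of keyword matching that a program like ELIZA relied on can be sketched in a few lines of Python. This is only an illustration: the rules, the `respond` function, and the fallback reply are invented here and are not taken from Weizenbaum’s original script.

```python
import re

# Hypothetical rules for illustration only; not Weizenbaum's original script.
# Each rule pairs a pattern with a reply template that reuses the captured text.
RULES = [
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def respond(utterance: str) -> str:
    """Return the reply for the first rule whose pattern matches the input."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*match.groups())
    # No keyword matched, so fall back to a neutral prompt.
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(respond("I am tired."))
    print(respond("The weather is nice."))
```

Nothing in this sketch models meaning: the program only rearranges the user’s own words, which is why the impression of understanding it can create is so striking.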

Chess-playing Computers: In the realm of games, AI made significant strides. Around 1950, Alan Turing and David Champernowne designed Turochamp, one of the first chess-playing programs, although no computer of the time could run it and Turing had to execute it by hand. In 1967, Mac Hack VI became the first computer program to compete in a chess tournament against humans.

Robotics: The 1960s also saw the birth of robotics as an interdisciplinary field. Stanford’s Hand-Eye project, which combined robotics with computer vision, was a pioneering effort in this direction. The project aimed to develop a robot that could perceive and interact with its environment.

The Rise of Expert Systems: By the 1970s, AI researchers began developing expert systems, computer programs that encode a human expert’s knowledge as rules and apply those rules to reach decisions. MYCIN, for instance, was an early expert system developed at Stanford that could diagnose bacterial infections and recommend antibiotics.
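The rule-based idea behind expert systems can be illustrated with a very small forward-chaining loop. This is a simplified sketch, not MYCIN’s design: the rules, the fact names, and the `infer` function are all invented for illustration and carry no medical meaning.

```python
# Hypothetical rules for illustration only: (facts required, fact concluded).
# They are not taken from MYCIN and are not medical guidance.
RULES = [
    ({"fever", "positive_culture"}, "bacterial_infection_suspected"),
    ({"bacterial_infection_suspected", "no_allergy_recorded"}, "consider_antibiotic"),
]


def infer(facts):
    """Apply the rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    print(sorted(infer({"fever", "positive_culture", "no_allergy_recorded"})))
```

The second rule can only fire after the first has added its conclusion, which shows how chains of simple rules build up to a recommendation.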

The First AI Winter (1974-1980)

The mid-to-late 1970s marked a period of disillusionment in the AI community, often referred to as the first “AI Winter.” This phase was characterized by reduced funding, skepticism, and a general pessimism about the future of AI.

Funding Cuts: One of the most significant blows to the AI community came in the form of reduced funding. Both government agencies and private investors became increasingly skeptical of the lofty promises made by AI researchers. The British government, for instance, drastically cut funding for AI research after the publication of the Lighthill Report in 1973, which criticized the lack of tangible results in the field.

Technical Limitations: The challenges faced by AI researchers were not just financial. The technical limitations of the time, including limited computational power and memory storage, hindered the development and deployment of sophisticated AI models.

Over-Promising and Under-Delivering: The early optimism surrounding AI led to inflated expectations. When AI systems failed to deliver on these expectations, it resulted in disappointment and skepticism. Projects that were once hailed as the future of AI, like machine translation, faced setbacks, further dampening the enthusiasm.

Resurgence and Modern AI

Despite the challenges of the AI Winter, the field was far from dormant. The 1980s and 1990s saw a gradual resurgence, setting the stage for the modern era of AI.

Neural Networks and Deep Learning: One of the most significant breakthroughs came in the form of neural networks, loosely inspired by the structure of the human brain. The popularization of backpropagation in the 1980s made networks with several layers practical to train. Stacking many such layers gave rise to deep learning, a subset of machine learning that has powered many of today’s AI advancements.
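What “layering” means can be shown with a minimal forward pass in plain Python. This is a sketch under simple assumptions: the function names (`dense`, `forward`), the sigmoid activation, and the example weights are chosen for illustration, and the training step is left out entirely.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases):
    """One layer: each output is a weighted sum of the inputs plus a bias,
    passed through the activation function."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the inputs through every layer in order; a "deep" network
    simply has many entries in `layers`."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations


if __name__ == "__main__":
    # Illustrative weights: 2 inputs -> 2 hidden units -> 1 output.
    layers = [
        ([[0.5, -0.2], [0.1, 0.4]], [0.0, 0.1]),
        ([[1.0, -1.0]], [0.0]),
    ]
    print(forward([1.0, 2.0], layers))
```

Each layer’s output becomes the next layer’s input, so deeper networks can compose simple transformations into more complex ones.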

Big Data Revolution: The explosion of data in the 21st century, often referred to as the “Big Data Revolution,” provided AI systems with vast amounts of information to train on. This abundance of data, combined with advanced algorithms, led to significant improvements in AI performance.

Hardware Advancements: The development of powerful GPUs (Graphics Processing Units) in the 2000s significantly accelerated AI research. These chips, originally designed to render graphics for games, proved to be highly effective at the parallel arithmetic needed to train complex neural networks.

Open Source and Collaboration: The AI community also benefited from a culture of collaboration and open-source sharing. Platforms like GitHub allowed researchers to share their work, leading to rapid iterations and improvements in AI models.

Conclusion

The odyssey of Artificial Intelligence is a captivating tale of dreams, challenges, setbacks, and triumphs. From the ancient myths of Talos and the Golem to the sophisticated neural networks of today, AI’s journey mirrors humanity’s enduring spirit of inquiry and innovation. While the path has been fraught with periods of doubt and introspection, like the AI Winter, each challenge has only spurred further exploration and breakthroughs.

Today, as we stand on the cusp of an AI-driven future, it’s essential to reflect on this rich history, for it charts not only the evolution of a technology but also the evolution of our aspirations and imagination. As we look ahead, the lessons from the past serve as both a beacon and a warning, reminding us of the immense potential and responsibility that come with shaping the future of Artificial Intelligence.